John Searle's Chinese room thought experiment caught the AI world off guard in 1980, and it still lingers in AI circles. At its core, it states that even if a computer (or a person who does not understand Chinese) sitting in a Chinese room translates Chinese into, say, English well enough to pass the Turing test, the computer (or the person) still does not understand the language or translate it consciously.

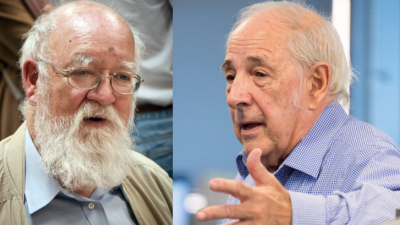

Daniel Dennett rejects the argument and calls the Chinese room an “intuition pump”. Dennett has argued that the person in the Chinese room would need memory, recall, emotion, world knowledge, and rationality to do the job.

I am with Searle on this one, even though both are among my favorite philosophers.

No, you do not need memory, recall, emotion, world knowledge, or rationality to shuffle words if you have a good “shuffling manual” in the Chinese room. OK, you do need some knowledge to understand the manual.

Sadly, more than 40 years later, GPT-3 and ChatGPT are doing the same thing, i.e. shuffling words with the help of a very thick manual (i.e. the Internet). We have indeed expanded the Chinese-room idea. We now have a “painting room” that shuffles colors to make AI artworks in the style of Da Vinci or Turner, a “music room” that shuffles musical notes, and so on. We do get fantastic outcomes from these “rooms”. However, we feel that something is definitely missing, and hope someone is working on it!
